Microsoft CEO Concerned: Rising Reports Of AI-Induced Psychosis

Microsoft CEO Satya Nadella has expressed growing concern over emerging reports linking prolonged AI interaction to symptoms resembling psychosis. The reports are prompting debate within the tech industry and raising questions about the ethical implications of rapidly advancing artificial intelligence. Even as AI transforms many sectors, its potential psychological harms are drawing increased attention.

The concerns stem from a growing number of anecdotal reports and some preliminary research suggesting a correlation between heavy AI use and psychosis-like symptoms. According to these reports, the symptoms include hallucinations, delusional thinking, and a distorted sense of reality, particularly among people who rely heavily on AI chatbots and virtual assistants. A correlation of this kind does not by itself establish that AI causes the symptoms.

<h3>The Nature of AI-Induced Psychosis: A Complex Issue</h3>

The mechanisms by which prolonged AI interaction might trigger psychosis-like symptoms remain largely unknown, though several hypotheses have emerged. One holds that the realistic and persuasive responses of advanced AI models can blur the line between reality and simulation, potentially distorting how some users judge what is real. Another points to the habit-forming quality of some AI interactions: excessive engagement may disrupt normal functioning and contribute to mental health problems.

It is important to distinguish genuine psychosis from temporary disorientation or confusion after using AI. Even if most reported cases turn out to be the milder kind, the possibility that AI could worsen pre-existing mental health conditions or trigger full-blown psychosis warrants serious investigation. For now, the lack of robust, large-scale studies makes the phenomenon difficult to assess.

<h3>The Call for Regulation and Responsible AI Development</h3>

Nadella's concern underscores the need for a more responsible approach to developing and deploying AI. He has reportedly called for closer collaboration among tech companies, mental health experts, and policymakers to establish guidelines and safeguards, including:

- Increased transparency: AI systems should clearly communicate their limitations and how their output can be misinterpreted.

- Improved safety protocols: Developers should prioritize safety features that reduce the risk of psychological harm.

- Enhanced user education: Users should be informed about the potential risks of excessive AI use and encouraged to keep their interactions in healthy balance.

- Further research: Significant investment is needed to understand the long-term effects of AI interaction on mental health.

This is not only a matter of individual user safety but a societal issue. As AI is adopted more widely, its potential harms need to be addressed proactively, through both technical safeguards and greater public awareness of the ethical questions involved.

<h3>The Future of AI and Mental Well-being: A Collaborative Effort</h3>

Addressing the potential for AI-induced psychosis will require collaboration among all stakeholders. Tech companies have a responsibility to prioritize user safety in design and development. Researchers need to conduct rigorous studies to understand the underlying mechanisms and develop effective mitigation strategies. Policymakers must create a regulatory framework that balances innovation with the protection of public health. How responsibly these challenges are handled will shape the future of AI, and ignoring the risks could cause serious harm.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Microsoft CEO Concerned: Rising Reports Of AI-Induced Psychosis. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

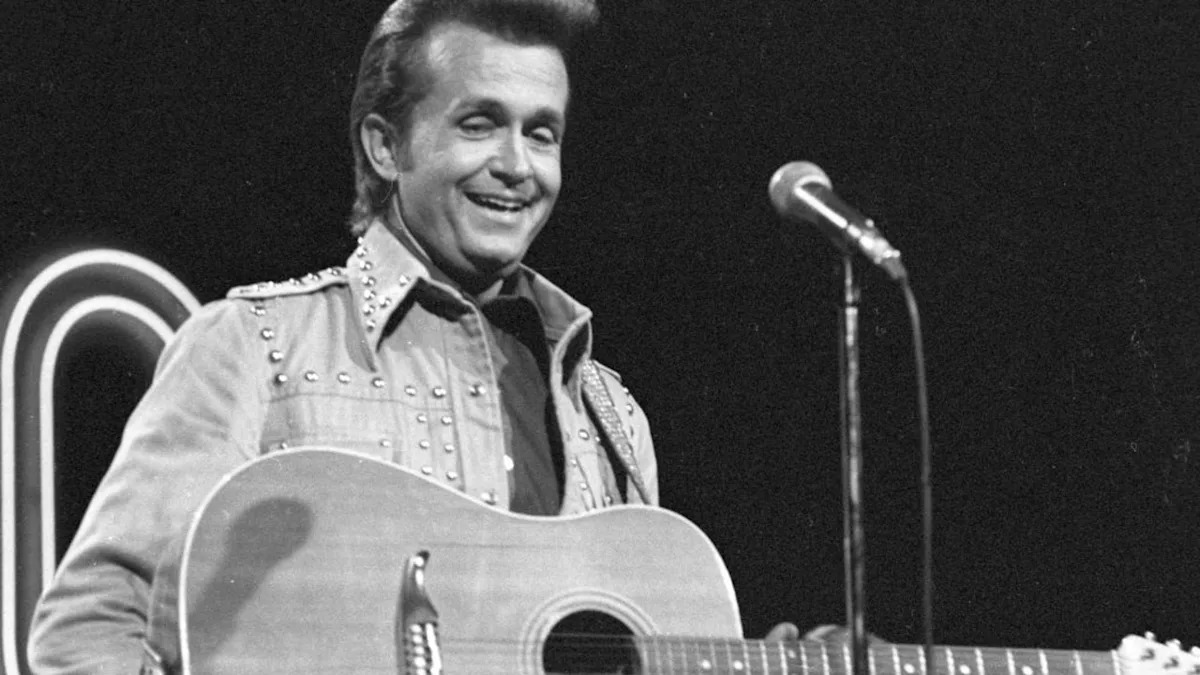

Country Music Legend Cancels Shows After Freak Accident At 87

Aug 22, 2025

Country Music Legend Cancels Shows After Freak Accident At 87

Aug 22, 2025 -

Why The Recent Outpouring Of St Georges And Union Jack Flags

Aug 22, 2025

Why The Recent Outpouring Of St Georges And Union Jack Flags

Aug 22, 2025 -

Epping Ruling Practical And Political Headaches For The Uk Home Office

Aug 22, 2025

Epping Ruling Practical And Political Headaches For The Uk Home Office

Aug 22, 2025 -

Romano Vs Alvarado The Fight For A Spot In The Phillies Packed Bullpen

Aug 22, 2025

Romano Vs Alvarado The Fight For A Spot In The Phillies Packed Bullpen

Aug 22, 2025 -

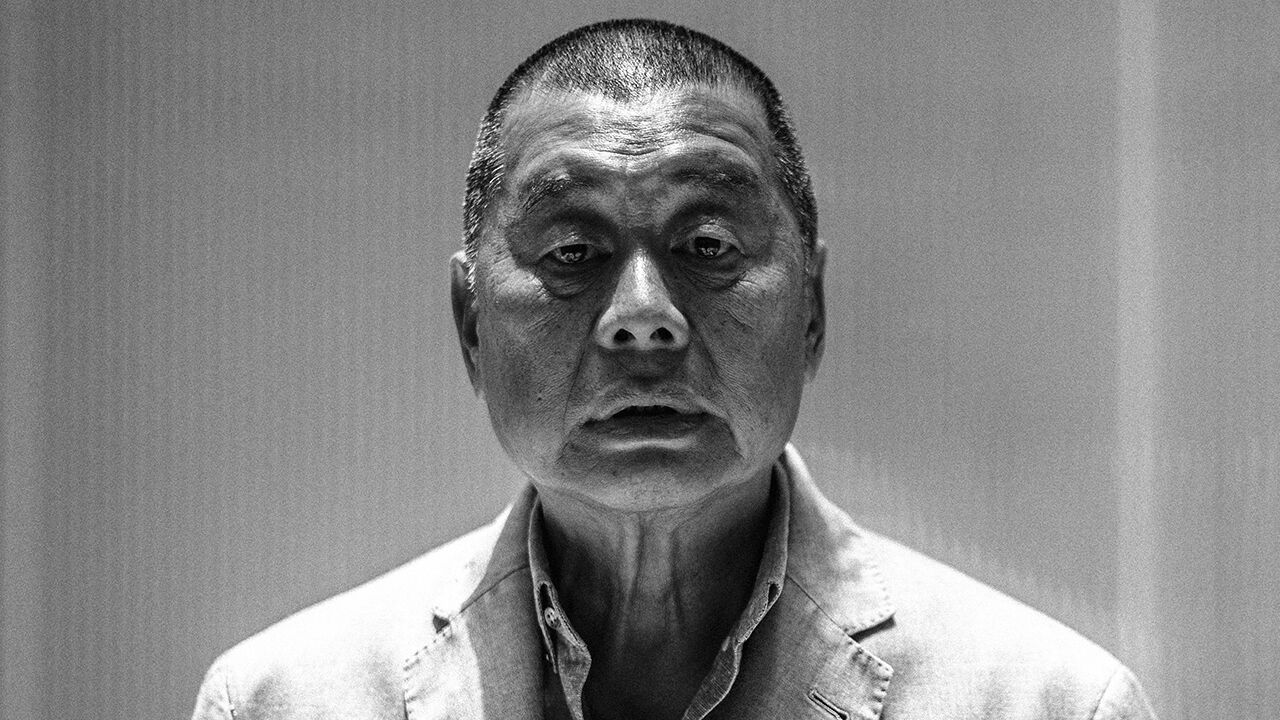

Hong Kong Courtroom Dramas Realism Fiction And Social Commentary

Aug 22, 2025

Hong Kong Courtroom Dramas Realism Fiction And Social Commentary

Aug 22, 2025

Latest Posts

-

Cowboy Builders Tricks Avoiding Costly Mistakes When Hiring A Contractor

Aug 22, 2025

Cowboy Builders Tricks Avoiding Costly Mistakes When Hiring A Contractor

Aug 22, 2025 -

Why Are So Many St Georges And Union Jack Flags Being Flown

Aug 22, 2025

Why Are So Many St Georges And Union Jack Flags Being Flown

Aug 22, 2025 -

Decoding The Gcse 9 1 Grading System A 2025 Guide

Aug 22, 2025

Decoding The Gcse 9 1 Grading System A 2025 Guide

Aug 22, 2025 -

Prevision Meteorologica Miami Temperatura Lluvia Y Viento

Aug 22, 2025

Prevision Meteorologica Miami Temperatura Lluvia Y Viento

Aug 22, 2025 -

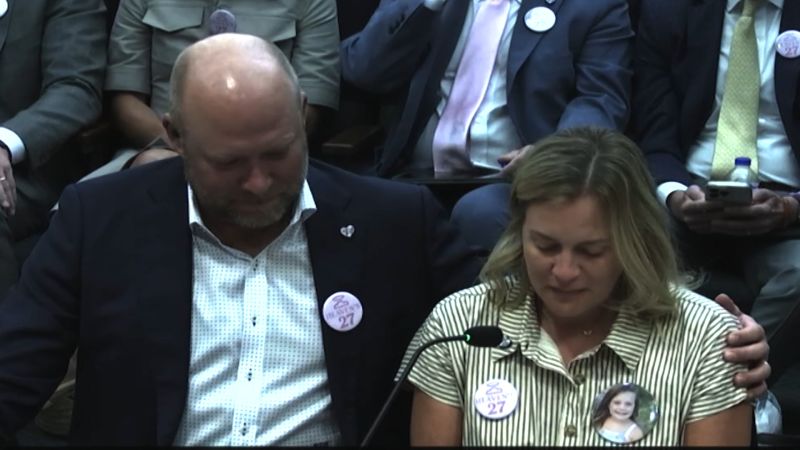

Devastating Camp Mystic Flood Parents Share Childrens Trauma

Aug 22, 2025

Devastating Camp Mystic Flood Parents Share Childrens Trauma

Aug 22, 2025