Is ChatGPT's Intelligence Overstated? A Case Study In Map Labeling

The meteoric rise of ChatGPT has captivated the world, showcasing impressive language capabilities and sparking debates about artificial general intelligence (AGI). But is the hype justified? Recent informal experiments with a task that sounds simple, map labeling, suggest that ChatGPT's intelligence, while undeniably advanced, may be significantly overstated in certain areas. This case study examines the limitations those experiments reveal when the model is asked to label geographical features on a map accurately.

The Map Labeling Experiment: A Simple Task, Complex Results

Several researchers and independent users have run informal experiments using ChatGPT for map labeling. The premise is simple: give ChatGPT coordinates and descriptions of geographical features (e.g., "mountain peak at 34.0522° N, 118.2437° W, elevation 1,000 m") and ask it to generate accurate labels. The results have been far from perfect, showing a surprising degree of inaccuracy and inconsistency.
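
Before any model output can be checked against reference data, coordinates written like the example prompt's (degrees plus a hemisphere letter) have to be turned into signed decimal degrees. The minimal sketch below assumes exactly the "lat° N/S, lon° E/W" format shown in that example; `parse_coordinate` is a hypothetical helper, not something taken from the experiments described here.

```python
import re

# One "34.0522° N"-style component: magnitude, optional degree sign, hemisphere letter.
_COMPONENT = re.compile(r"\s*(\d+(?:\.\d+)?)\s*°?\s*([NSEW])\s*", re.IGNORECASE)


def parse_coordinate(text: str) -> tuple[float, float]:
    """Parse 'lat° N/S, lon° E/W' into signed (latitude, longitude) decimal degrees."""
    parts = text.split(",")
    if len(parts) != 2:
        raise ValueError(f"expected 'lat, lon', got {text!r}")
    values = []
    # The first component must be N/S (latitude), the second E/W (longitude).
    for part, allowed in zip(parts, ("NS", "EW")):
        match = _COMPONENT.fullmatch(part)
        if match is None:
            raise ValueError(f"unparseable component {part!r}")
        magnitude, hemisphere = float(match.group(1)), match.group(2).upper()
        if hemisphere not in allowed:
            raise ValueError(f"hemisphere {hemisphere!r} is not valid in this position")
        # By convention, south latitudes and west longitudes are negative.
        values.append(-magnitude if hemisphere in "SW" else magnitude)
    lat, lon = values
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        raise ValueError("coordinate out of range")
    return lat, lon


print(parse_coordinate("34.0522° N, 118.2437° W"))  # (34.0522, -118.2437)
```

Rejecting malformed or out-of-range input, rather than guessing, keeps errors in the test data from being blamed on the model.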

Inconsistencies and Errors Revealed:

- Inaccurate Labeling: ChatGPT frequently mislabeled features, either misidentifying the type of feature (e.g., labeling a lake as a river) or providing incorrect names. This wasn't limited to obscure locations; even well-known landmarks sometimes received inaccurate labels.

- Hallucination of Data: In several instances, ChatGPT "hallucinated," generating labels for features that did not exist at the specified coordinates. This exposes a significant weakness: the model does not check its output against the supplied data or against known facts, a crucial requirement for reliable information processing.

- Inconsistent Formatting: The label format also varied; some labels were overly verbose, while others omitted crucial information. This points to a lack of robust control over output structure.
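
The three error types listed above can be made measurable by comparing each model label with trusted reference data. The sketch below is one possible approach under stated assumptions: the model is asked to answer in a hypothetical "Name (kind)" template, the reference features come from some trusted gazetteer (the toy data here is invented), and a label counts as hallucinated when no reference feature lies within a 1 km tolerance of the queried point. `Feature`, `classify_label` and `LABEL_FORMAT` are illustrative names, not part of the experiments described in this article.

```python
import math
import re
from dataclasses import dataclass

# Hypothetical template the model is asked to follow: "Name (kind)".
LABEL_FORMAT = re.compile(r"(?P<name>[^()]+?) \((?P<kind>[a-z ]+)\)", re.IGNORECASE)


@dataclass
class Feature:
    name: str
    kind: str   # e.g. "lake", "river", "peak"
    lat: float  # signed decimal degrees
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km, assuming a spherical Earth of radius 6371 km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def classify_label(text: str, lat: float, lon: float,
                   reference: list[Feature], tolerance_km: float = 1.0) -> str:
    """Sort one label into 'bad_format', 'hallucinated', 'wrong_type', 'wrong_name' or 'ok'."""
    match = LABEL_FORMAT.fullmatch(text.strip())
    if match is None:
        return "bad_format"  # label does not follow the requested template
    distances = [(haversine_km(lat, lon, f.lat, f.lon), f) for f in reference]
    nearby = [(d, f) for d, f in distances if d <= tolerance_km]
    if not nearby:
        return "hallucinated"  # no reference feature near the queried point
    _, closest = min(nearby, key=lambda pair: pair[0])
    if match["kind"].strip().lower() != closest.kind.lower():
        return "wrong_type"  # e.g. a lake labeled as a river
    if match["name"].strip().lower() != closest.name.lower():
        return "wrong_name"
    return "ok"


# Toy reference data (invented for illustration).
reference = [Feature("Example Lake", "lake", 10.0, 20.0)]
print(classify_label("Example Lake (lake)", 10.0, 20.0, reference))   # ok
print(classify_label("Example Lake (river)", 10.0, 20.0, reference))  # wrong_type
print(classify_label("Other Lake (lake)", 10.0, 20.0, reference))     # wrong_name
print(classify_label("Mount Nowhere (peak)", 0.0, 0.0, reference))    # hallucinated
print(classify_label("Example Lake", 10.0, 20.0, reference))          # bad_format
```

Checking format first means a verbose or incomplete answer is counted once, as a formatting failure, instead of being misread as a wrong name.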

Why These Errors Matter:

These errors may look minor, but they have significant implications. Labeling a map might seem trivial, yet it exercises fundamental aspects of data processing and knowledge representation, capabilities crucial for any system aiming for AGI. The inability to perform this task accurately and consistently underscores the limits of ChatGPT's current capabilities.

Beyond Map Labeling: A Broader Perspective

This case study isn't intended to diminish ChatGPT's impressive achievements in natural language processing. It's important to remember that it is a large language model (LLM), trained primarily to predict the next token in a sequence of text, not a system designed for perfect reasoning or factual accuracy. Still, the map labeling experiment highlights the crucial difference between impressive language fluency and true understanding.

The results challenge the narrative often surrounding LLMs, suggesting that focusing solely on impressive outputs without rigorously testing their underlying knowledge and reasoning capabilities can lead to an overestimation of their true intelligence. Future development should prioritize robust fact-checking mechanisms and improved knowledge representation to bridge this gap.
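
One concrete form of rigorous testing is to send the same labeling prompt several times and measure how often the answers agree, since a model that is right on one run and wrong on the next cannot be relied on. The sketch below assumes the labels from each run have already been collected into lists in the same feature order; `agreement_rate` is a hypothetical helper, and high agreement alone does not imply accuracy, because a model can be consistently wrong.

```python
from collections import Counter


def agreement_rate(runs: list[list[str]]) -> float:
    """Mean, over features, of the share of runs that returned that feature's most common label.

    runs[r][i] is the label returned for feature i on run r.
    """
    if not runs or not runs[0]:
        raise ValueError("need at least one run with at least one feature")
    n_features = len(runs[0])
    if any(len(run) != n_features for run in runs):
        raise ValueError("every run must label the same features")
    total = 0.0
    for i in range(n_features):
        # Normalize case and whitespace so cosmetic differences don't count as disagreement.
        labels = Counter(run[i].strip().lower() for run in runs)
        total += labels.most_common(1)[0][1] / len(runs)
    return total / n_features


runs = [
    ["Example Lake (lake)", "Example Peak (peak)"],
    ["Example Lake (lake)", "Example Peak (mountain)"],
    ["example lake (lake)", "Example Peak (peak)"],
]
print(round(agreement_rate(runs), 3))  # 0.833
```

Here the first feature gets the same label on all three runs (1.0) and the second on two of three (about 0.667), giving a mean of about 0.833.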

Further Research & Conclusion:

Further research is needed to explore the limitations of LLMs in various tasks demanding accurate information retrieval and logical reasoning. While ChatGPT represents a significant step forward in AI, the map labeling experiment serves as a valuable reminder that the path to AGI is long and complex, requiring a deeper understanding of knowledge representation, reasoning, and error correction beyond simply generating grammatically correct and fluent text. The hype surrounding AI should be tempered with critical evaluation and a focus on robust testing methodologies. What are your thoughts on this? Share your perspectives in the comments below!

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Is ChatGPT's Intelligence Overstated? A Case Study In Map Labeling. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

12 Highlights You Wont Want To Miss Alaska State Fair Weekend 1

Aug 16, 2025

12 Highlights You Wont Want To Miss Alaska State Fair Weekend 1

Aug 16, 2025 -

Putins Peace Proposal A Look Back At Vj Day And The Future Of Global Relations

Aug 16, 2025

Putins Peace Proposal A Look Back At Vj Day And The Future Of Global Relations

Aug 16, 2025 -

Alaskans React Anger And Hope Amid Trump And Putin Visits

Aug 16, 2025

Alaskans React Anger And Hope Amid Trump And Putin Visits

Aug 16, 2025 -

Wwiis Nanjing Massacre A Continuing Source Of Tension Between China And Japan

Aug 16, 2025

Wwiis Nanjing Massacre A Continuing Source Of Tension Between China And Japan

Aug 16, 2025 -

Reno Casino Shooting Police Bodycam Footage Reveals Dramatic Confrontation

Aug 16, 2025

Reno Casino Shooting Police Bodycam Footage Reveals Dramatic Confrontation

Aug 16, 2025

Latest Posts

-

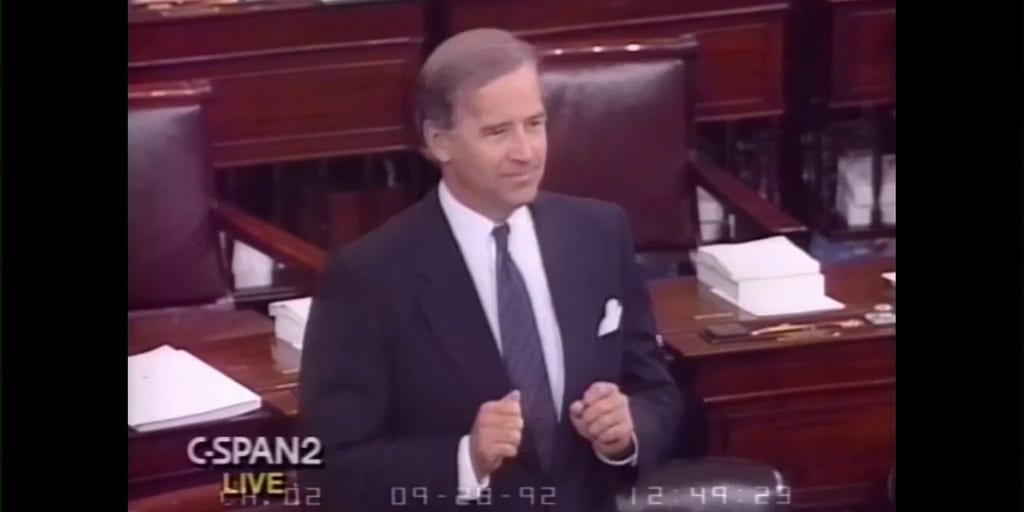

Thirty Years Later Examining Bidens 1992 Crime Concerns In Washington D C

Aug 18, 2025

Thirty Years Later Examining Bidens 1992 Crime Concerns In Washington D C

Aug 18, 2025 -

Us China Tensions Flare The Role Of A Hong Kong Media Mogul

Aug 18, 2025

Us China Tensions Flare The Role Of A Hong Kong Media Mogul

Aug 18, 2025 -

What The No Ceasfire No Deal Summit Means For The Us Russia And Ukraine

Aug 18, 2025

What The No Ceasfire No Deal Summit Means For The Us Russia And Ukraine

Aug 18, 2025 -

Delta Blues Culture Preserving Heritage In A Mississippi Town

Aug 18, 2025

Delta Blues Culture Preserving Heritage In A Mississippi Town

Aug 18, 2025 -

Americans Abandon Trump Cnn Data Pinpoints The Decisive Factor

Aug 18, 2025

Americans Abandon Trump Cnn Data Pinpoints The Decisive Factor

Aug 18, 2025