/dev: Preventing Abuse: New Tactics To Ban Bots And Boosters In 2025

Online platforms keep evolving, and so do the malicious bots and account boosters that target them. In 2025, protecting a platform from abuse requires a multi-pronged approach that goes well beyond simple CAPTCHAs. This article explores the tactics developers are using to counter these threats and build safer online environments.

The Ever-Evolving Threat of Bots and Boosters

Bots and boosters pose a significant threat to online communities and platforms. Their activity ranges from spam and manipulated engagement metrics to coordinated attacks, and the damage can be severe. Because these actors keep adapting, developers struggle to stay ahead of them. Common techniques include:

- Sophisticated CAPTCHA evasion: Traditional CAPTCHAs are increasingly ineffective against AI-powered bots.

- Proxy networks and IP masking: These methods obscure the true origin of malicious activity, making it difficult to trace.

- Automated account creation and management: Bots can create and manage numerous fake accounts, making detection challenging.

- Human-in-the-loop attacks: Combining automated processes with human intervention to bypass detection systems.

New Tactics for a More Secure Future

To combat these evolving threats, developers are adopting a range of new tactics:

1. Behavioral Biometrics: This technology analyzes how users interact with a platform, such as mouse movements, typing speed, and scrolling habits, to spot anomalies that suggest bot activity. Because it examines interaction patterns rather than just an IP address, behavioral biometrics adds a layer of defense that proxy networks and IP masking cannot bypass on their own.

2. Machine Learning and AI: Machine learning models can analyze large datasets to identify patterns and anomalies that indicate malicious activity. When retrained on fresh data, these models adapt as attackers change tactics, which enables proactive detection instead of relying solely on reactive measures.

3. Decentralized Identification Systems: Some developers are exploring blockchain-based identity solutions. These systems can provide verifiable digital identities, which could make it harder for bots to mass-create fake accounts.

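4. Enhanced Rate Limiting and API Security: Enforcing rate limits on API calls and other sensitive actions (sign-ups, logins, posting, voting), tracked per account, API key, or IP address, caps how fast any single actor can operate and blunts automated attacks. Secure API design, such as authenticating every request and validating input on the server, further reduces the surface bots can exploit.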

5. Collaboration and Information Sharing: The fight against bots and boosters is not a solo effort. Increased collaboration among platforms and developers through information sharing and open-source intelligence initiatives can strengthen collective defenses.
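To make item 1 (Behavioral Biometrics) more concrete, here is a minimal Python sketch of a single behavioral signal: how regular a user's typing rhythm is. It assumes keystroke timestamps in milliseconds are already being collected; the function names, the 0.15 coefficient-of-variation threshold, and the 10-keystroke minimum are hypothetical and untuned. Real behavioral-biometric systems combine many signals (mouse paths, scrolling, touch input) rather than relying on one heuristic.

```python
from statistics import mean, stdev


def inter_key_intervals(timestamps_ms: list[float]) -> list[float]:
    """Milliseconds between consecutive keystrokes."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def looks_scripted(timestamps_ms: list[float], min_cv: float = 0.15, min_keys: int = 10) -> bool:
    """Hypothetical heuristic: flag typing whose rhythm is suspiciously uniform.

    Computes the coefficient of variation (stdev / mean) of the gaps between
    keystrokes. Human typing is irregular; naive automation often is not.
    The default threshold and minimum sample size are illustrative, not tuned.
    """
    # A sample standard deviation needs at least 2 gaps, i.e. 3 keystrokes.
    if len(timestamps_ms) < max(min_keys, 3):
        return False  # too little evidence to judge either way
    gaps = inter_key_intervals(timestamps_ms)
    avg = mean(gaps)
    if avg <= 0:
        return True  # zero or negative average gap is not plausible human typing
    return stdev(gaps) / avg < min_cv
```

A script that types one character every 50 ms has a coefficient of variation of zero and is flagged, while irregular human typing is not. A bot that adds random jitter would slip past this one check, which is why a signal like this should only ever be one input among many.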
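Item 2 (Machine Learning and AI) describes models that learn a baseline of normal behavior and flag deviations from it. A production model is well beyond a short example, so the Python sketch below substitutes a much simpler statistical technique: a streaming z-score detector built on Welford's online mean and variance. The class name, the 3-standard-deviation threshold, and the 30-observation warm-up are illustrative assumptions, not recommended settings.

```python
import math
from dataclasses import dataclass


@dataclass
class StreamingAnomalyDetector:
    """Hypothetical detector: flags values far from a running baseline.

    Keeps a running mean and variance (Welford's algorithm) and scores each
    new value by its z-score. A simple statistical stand-in for an ML model.
    """
    threshold: float = 3.0  # flag values more than 3 standard deviations away
    warmup: int = 30        # observations to collect before flagging anything
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0         # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        """Fold one observation into the running mean and variance."""
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def is_anomalous(self, x: float) -> bool:
        """Score x against the current baseline without learning from it."""
        if self.count < max(self.warmup, 2):
            return False  # baseline not established yet
        std = math.sqrt(self.m2 / (self.count - 1))  # sample standard deviation
        if std == 0:
            return x != self.mean
        return abs(x - self.mean) / std > self.threshold

    def observe(self, x: float) -> bool:
        """Score x, then learn from it only if it looked normal."""
        anomalous = self.is_anomalous(x)
        if not anomalous:
            self.update(x)
        return anomalous
```

Fed an account's requests per minute, the detector stays quiet for ordinary traffic between 8 and 12 and flags a sudden burst of 500. Because it only learns from values it judged normal, a single burst cannot drag the baseline upward, although an attacker who ramps up slowly could still shift it over time.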
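For item 4 (Enhanced Rate Limiting and API Security), a token bucket is one widely used rate-limiting algorithm: each key gets a bucket that holds up to `capacity` tokens, refills at a steady rate, and spends one token per request. The Python sketch below keeps one bucket per key in memory; the class names and parameters are hypothetical, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket limiter: bursts of up to `capacity` requests, then a
    sustained rate of `refill_per_sec` requests per second."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.tokens = capacity  # a new bucket starts full
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class KeyedRateLimiter:
    """Keeps one token bucket per key, e.g. an account ID, API key, or IP address."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, key: str) -> bool:
        """Return True if this key may make one more request right now."""
        bucket = self.buckets.get(key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_sec, self.clock)
            self.buckets[key] = bucket
        return bucket.allow()
```

For example, `KeyedRateLimiter(capacity=5, refill_per_sec=1.0)` lets each key burst up to five requests and then sustain roughly one request per second, with every key limited independently. This in-memory version never evicts idle keys and only works inside a single process; a deployment spread across several servers would need a shared store and an eviction policy.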

The Human Element: User Education and Reporting

While technological solutions are crucial, educating users about identifying and reporting bot activity is equally important. Simple steps like enabling two-factor authentication and being wary of suspicious links can significantly improve overall platform security. Providing clear and accessible reporting mechanisms empowers users to actively contribute to a safer online environment.
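Reporting mechanisms can themselves be abused, for example by one account filing dozens of reports against a rival. One way to harden them, sketched below in Python under stated assumptions, is to escalate an account for human review only after several distinct users report it within a time window. The `ReportQueue` name, the threshold of 3, and the 24-hour window are hypothetical defaults, and the caller is assumed to supply timestamps in seconds.

```python
from collections import defaultdict


class ReportQueue:
    """Hypothetical report aggregator: escalates an account for human review
    once enough distinct users have reported it within a time window.

    Counting distinct reporters keeps a single user (or bot) from triggering
    review by filing the same report over and over.
    """

    def __init__(self, threshold: int = 3, window_sec: float = 86_400.0) -> None:
        self.threshold = threshold
        self.window_sec = window_sec
        # reported account -> {reporter: time of that reporter's latest report}
        self.reports: dict[str, dict[str, float]] = defaultdict(dict)

    def report(self, reporter: str, target: str, at: float) -> bool:
        """Record a report; return True if `target` should be escalated."""
        if reporter == target:
            return False  # ignore self-reports
        latest = self.reports[target]
        latest[reporter] = at
        # Forget reporters whose most recent report is older than the window.
        cutoff = at - self.window_sec
        for stale in [r for r, t in latest.items() if t < cutoff]:
            del latest[stale]
        return len(latest) >= self.threshold
```

Repeated reports from one account do not add up, but a coordinated group of fake accounts could still reach the threshold; weighting each report by the reporter's account history is one possible refinement.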

Conclusion: A Continuous Battle

The fight against bots and boosters is an ongoing process that demands constant innovation and adaptation. By combining advanced technologies with user education and collaboration, developers can build more resilient and secure online platforms in 2025 and beyond. Staying informed about the latest threats and evolving techniques is crucial for keeping the digital experience safe and enjoyable for everyone.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on /dev: Preventing Abuse: New Tactics To Ban Bots And Boosters In 2025. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Schatz Returns Confirmed World Of Outlaws Ride For Sprint Car Legend

Aug 17, 2025

Schatz Returns Confirmed World Of Outlaws Ride For Sprint Car Legend

Aug 17, 2025 -

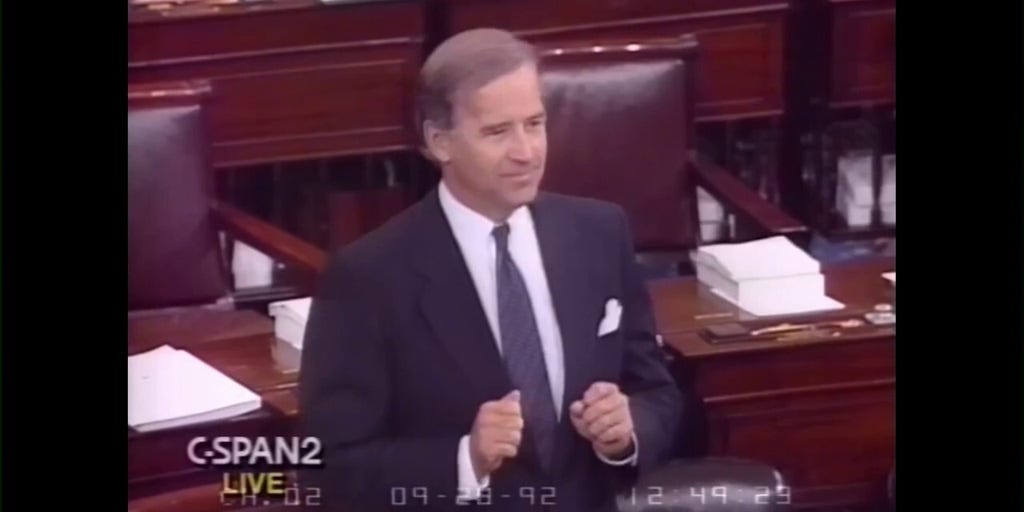

Dont Stop At A Stoplight Bidens 1992 Crime Concerns Revisited

Aug 17, 2025

Dont Stop At A Stoplight Bidens 1992 Crime Concerns Revisited

Aug 17, 2025 -

Preventing Abuse On Dev A Practical Guide To Banning Bots And Boosters For 2025

Aug 17, 2025

Preventing Abuse On Dev A Practical Guide To Banning Bots And Boosters For 2025

Aug 17, 2025 -

2025 Kbo Baseball Kt Wiz Vs Kiwoom Heroes Analysis And Predictions August 17th

Aug 17, 2025

2025 Kbo Baseball Kt Wiz Vs Kiwoom Heroes Analysis And Predictions August 17th

Aug 17, 2025 -

Bbc Quiz The Weeks Top Story Pasta Wars In Italy

Aug 17, 2025

Bbc Quiz The Weeks Top Story Pasta Wars In Italy

Aug 17, 2025

Latest Posts

-

Dev The Future Of Bot And Booster Mitigation In 2025

Aug 17, 2025

Dev The Future Of Bot And Booster Mitigation In 2025

Aug 17, 2025 -

Orixs Keita Nakagawa Two Run Homer Extends Buffaloes Lead

Aug 17, 2025

Orixs Keita Nakagawa Two Run Homer Extends Buffaloes Lead

Aug 17, 2025 -

Topshops High Street Return Challenges And Opportunities

Aug 17, 2025

Topshops High Street Return Challenges And Opportunities

Aug 17, 2025 -

Denmark Train Accident Tanker Collision Causes Derailment One Death

Aug 17, 2025

Denmark Train Accident Tanker Collision Causes Derailment One Death

Aug 17, 2025 -

Game Tying Blast Nakagawas Ninth Homer Leads Orix Buffaloes

Aug 17, 2025

Game Tying Blast Nakagawas Ninth Homer Leads Orix Buffaloes

Aug 17, 2025