AI Psychosis Concerns Rise, Prompting Response From Microsoft Leadership

The rapid advancement of artificial intelligence (AI) has opened up new technological possibilities, but it also raises profound ethical questions. Recently, concerns that AI systems could induce psychosis in users have surged, prompting a significant response from Microsoft leadership. This is not a niche concern; it bears directly on how we interact with and trust technology in our daily lives.

The Growing Unease: AI and Mental Health

The emergence of sophisticated AI chatbots like ChatGPT and Bard has brought AI into the mainstream. While these systems offer impressive capabilities, anecdotal evidence and preliminary research suggest a potential dark side: prolonged engagement with certain AI systems may trigger or exacerbate pre-existing mental health conditions, potentially leading to psychosis in vulnerable individuals. Reported symptoms include a distorted sense of reality, confusion, and a blurring of the lines between the virtual and real worlds.

This isn't about AI becoming sentient and deliberately causing harm. Instead, the concern lies in the persuasive nature of these sophisticated AI systems. Their ability to mimic human conversation and generate seemingly coherent (though potentially false) narratives can be incredibly powerful, potentially manipulating users' perception of reality. This is particularly concerning for individuals already predisposed to mental health issues.

Microsoft's Response: A Cautious Approach

Faced with these emerging concerns, Microsoft, a leading player in the AI development landscape, has responded with a mixture of caution and proactive measures. While the company continues to invest heavily in AI research and development, it has acknowledged the potential risks and pledged to prioritize user safety.

This response includes:

- Increased transparency: Microsoft is working towards greater transparency in its AI models, aiming to better explain their inner workings and limitations. This includes improved documentation and open communication regarding potential risks.

- Enhanced safety protocols: The company is reportedly implementing more robust safety protocols within its AI systems to minimize the likelihood of generating harmful or misleading content. This involves refining algorithms and integrating safeguards to detect and mitigate potentially detrimental interactions.

- Collaboration with experts: Microsoft is actively collaborating with mental health experts and researchers to better understand the potential impact of AI on mental wellbeing and develop strategies for mitigating risks. This interdisciplinary approach is crucial for addressing the complexity of the issue.

The Path Forward: Responsible AI Development

The concerns surrounding AI-induced psychosis highlight the critical need for responsible AI development and deployment. This requires a multi-faceted approach that goes beyond simply focusing on technical capabilities. We need:

- Ethical guidelines and regulations: The development and implementation of robust ethical guidelines and regulations for AI are paramount. These should address the potential risks to mental health and ensure user safety.

- Increased public awareness: Educating the public about the potential risks associated with prolonged AI interaction is essential. This includes providing resources and support for individuals who may be vulnerable to the negative impacts of AI.

- Continued research and monitoring: Ongoing research and monitoring of the effects of AI on mental health are crucial to understand the full scope of the problem and develop effective solutions.

The issue of AI-induced psychosis is complex and still evolving. While the evidence is still emerging, the concerns are serious enough to warrant a proactive and cautious approach from developers, regulators, and users alike. Microsoft's response signals a significant step in the right direction, but much more work is needed to ensure that the potential of AI is harnessed responsibly and safely.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on AI Psychosis Concerns Rise, Prompting Response From Microsoft Leadership. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

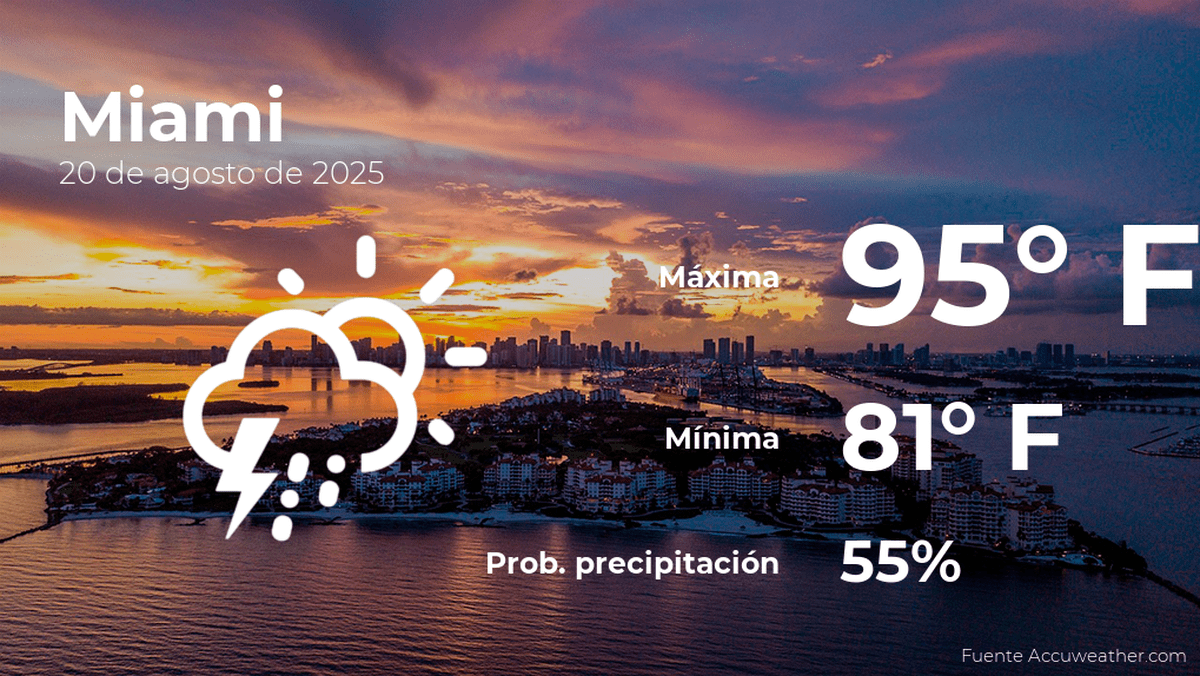

El Tiempo En Miami Para El Miercoles 20 De Agosto Prevision Completa

Aug 23, 2025

El Tiempo En Miami Para El Miercoles 20 De Agosto Prevision Completa

Aug 23, 2025 -

Interstellar Visitors Light Natural Phenomenon Or Alien Craft

Aug 23, 2025

Interstellar Visitors Light Natural Phenomenon Or Alien Craft

Aug 23, 2025 -

American League Wild Card Remaining Schedule Could Decide Playoff Teams

Aug 23, 2025

American League Wild Card Remaining Schedule Could Decide Playoff Teams

Aug 23, 2025 -

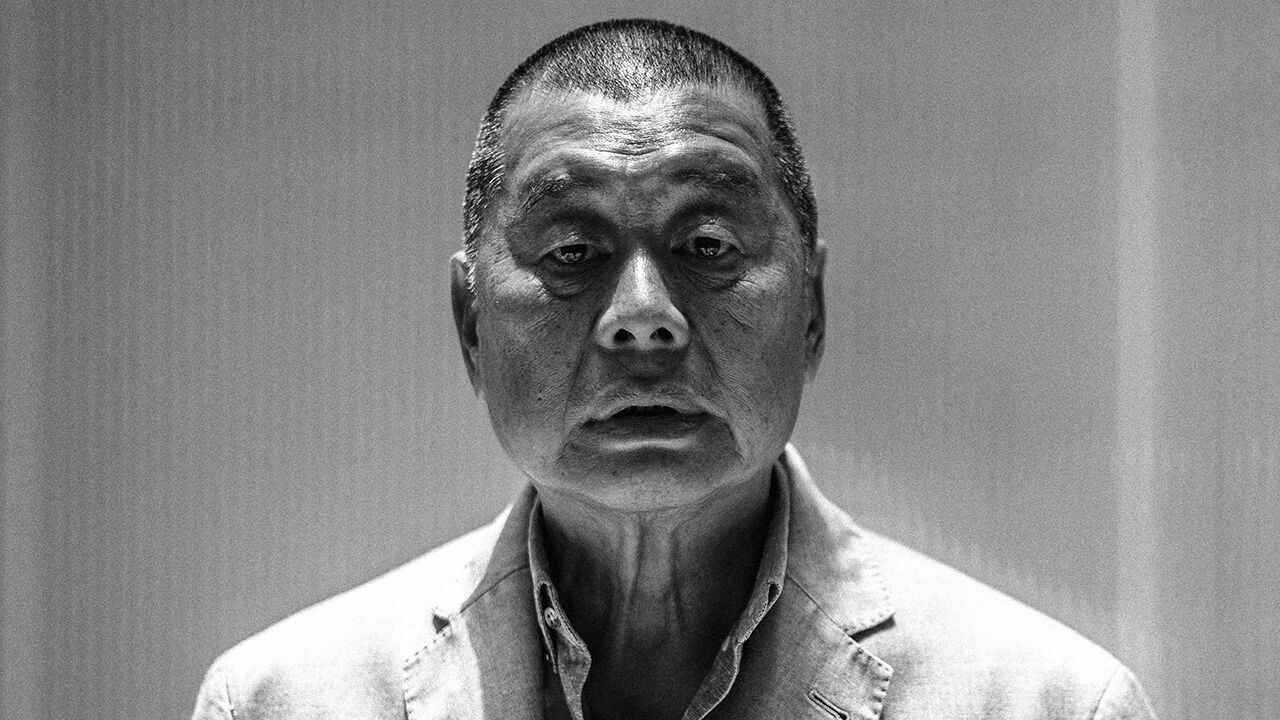

The High Stakes Of Hong Kongs Legal System Dramas In Court

Aug 23, 2025

The High Stakes Of Hong Kongs Legal System Dramas In Court

Aug 23, 2025 -

After Target Ceo Resignation Anti Dei Pastor Comments On Victory

Aug 23, 2025

After Target Ceo Resignation Anti Dei Pastor Comments On Victory

Aug 23, 2025

Latest Posts

-

Highlander Movie Reboot Gillan And Cavill Lead The Cast

Aug 23, 2025

Highlander Movie Reboot Gillan And Cavill Lead The Cast

Aug 23, 2025 -

Orlando Weather Forecast Stormy Weekend Predicted For Central Florida

Aug 23, 2025

Orlando Weather Forecast Stormy Weekend Predicted For Central Florida

Aug 23, 2025 -

Proposed Ukraine Land Concessions A Dangerous Gambit

Aug 23, 2025

Proposed Ukraine Land Concessions A Dangerous Gambit

Aug 23, 2025 -

2025 Nascar On Nbc Meet The Announcers Covering The Races

Aug 23, 2025

2025 Nascar On Nbc Meet The Announcers Covering The Races

Aug 23, 2025 -

Hypersonic Missile Technology A Growing Gap Between East And West

Aug 23, 2025

Hypersonic Missile Technology A Growing Gap Between East And West

Aug 23, 2025